AI for Temporal Data

Temporal scientific data, characterized by its sequential nature and complexity, is prevalent across many scientific disciplines, including astronomy, physics, and climate science. The primary challenge in this domain is the sheer volume and complexity of the data, which make classical analysis impractical and often cause significant insights to be overlooked. Existing approaches often fall short in handling the high dimensionality and overwhelming noise inherent in such data. Moreover, conventional methods frequently struggle to capture the long-range dependencies that are crucial for accurate modeling and prediction.

Basic Ideas:

- Leveraging advanced foundation models to model long temporal sequences, especially in low signal-to-noise ratio (SNR) scenarios.

- Developing a universal and robust framework for modeling the different types of signals found in temporal scientific data.

- Developing synthetic data simulation software for large-scale model training with on-the-fly data generation.
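The on-the-fly generation idea in the last bullet can be sketched as a Python generator that simulates every training example at request time instead of reading it from a fixed dataset. This is a minimal illustration under loudly stated assumptions, not our simulation software: the signal is a toy sine-Gaussian rather than a physical GW waveform, the noise is white Gaussian rather than colored detector noise, and all names and parameter ranges (`sine_gaussian`, `synthetic_stream`, `snr_range`, etc.) are hypothetical.

```python
import numpy as np


def sine_gaussian(t, t0, f0, tau, phase):
    """Toy signal (hypothetical): a Gaussian-windowed sinusoid, not a physical GW waveform."""
    envelope = np.exp(-((t - t0) ** 2) / (2.0 * tau ** 2))
    return envelope * np.sin(2.0 * np.pi * f0 * (t - t0) + phase)


def synthetic_stream(n_samples=4096, fs=4096.0, snr_range=(5.0, 50.0), sigma=1.0, seed=0):
    """Yield (noisy, clean, snr) examples indefinitely, simulating each one on the fly.

    Assumes white Gaussian noise with standard deviation `sigma`, for which the
    optimal SNR of a signal h is sqrt(sum(h**2)) / sigma.
    """
    rng = np.random.default_rng(seed)
    t = np.arange(n_samples) / fs
    duration = n_samples / fs
    while True:
        # Draw random source parameters for this example (ranges are illustrative).
        t0 = rng.uniform(0.25, 0.75) * duration
        f0 = rng.uniform(20.0, 500.0)
        tau = rng.uniform(0.01, 0.1)
        phase = rng.uniform(0.0, 2.0 * np.pi)
        h = sine_gaussian(t, t0, f0, tau, phase)
        # Rescale the clean signal to the requested SNR, then add a fresh noise realization.
        snr = rng.uniform(*snr_range)
        h *= snr * sigma / np.sqrt(np.sum(h ** 2))
        noisy = h + rng.normal(0.0, sigma, n_samples)
        yield noisy, h, snr


# Usage: each next() call returns a brand-new training example.
stream = synthetic_stream(seed=42)
noisy, clean, snr = next(stream)
```

Because each example draws fresh source parameters and fresh noise, the effective training set is unbounded and needs no disk storage; a realistic pipeline would swap the toy signal for a proper waveform generator and detector response.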

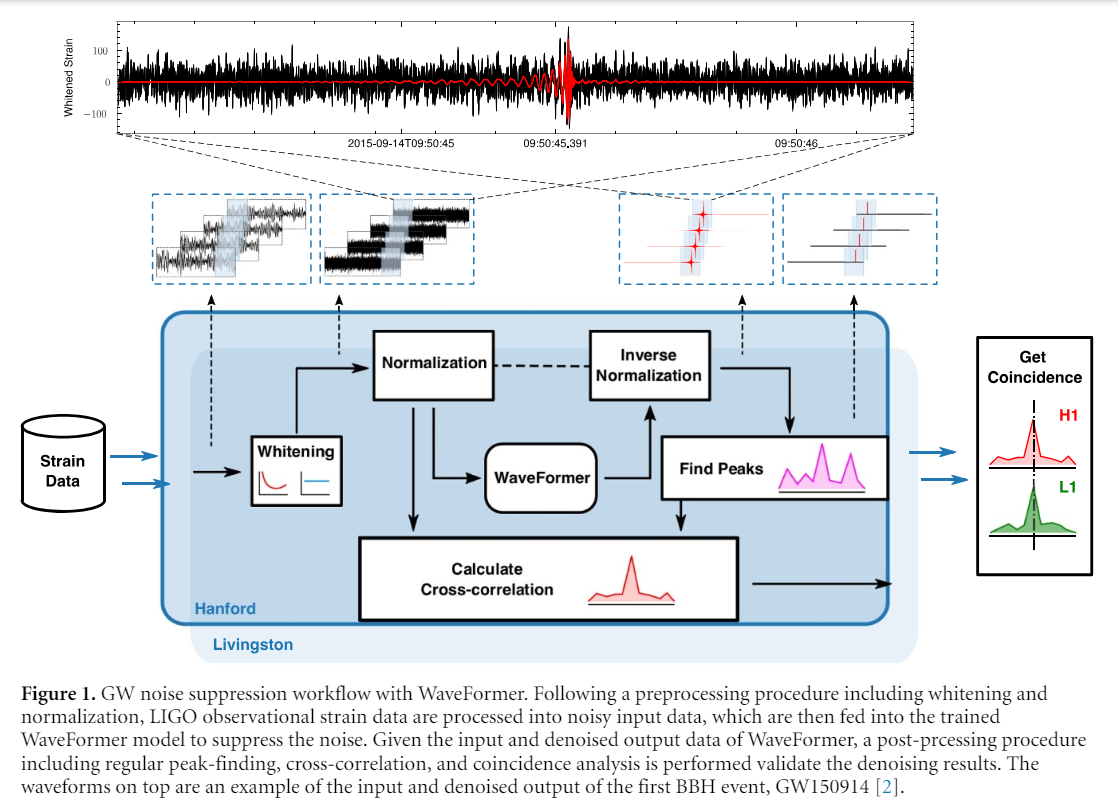

WaveFormer: transformer-based denoising method for gravitational-wave data

Paper Link: https://iopscience.iop.org/article/10.1088/2632-2153/ad2f54

With the advent of gravitational-wave astronomy and the discovery of more compact binary coalescences, data quality improvement techniques are needed to handle the complex and overwhelming noise in gravitational-wave (GW) observational data. Although recent machine-learning-based studies have shown promising results for data denoising, they are unable to precisely recover both the amplitude and the phase of the GW signal. To address this issue, we develop WaveFormer, a deep-neural-network-centered workflow for significant noise suppression and signal recovery on observational data from the Laser Interferometer Gravitational-Wave Observatory (LIGO). WaveFormer has a science-driven architecture with hierarchical feature extraction across a broad frequency spectrum. As a result, overall noise and glitches are reduced by more than one order of magnitude, and the signal recovery error is roughly 1% for the phase and 7% for the amplitude. Moreover, on 75 reported binary black hole (BBH) events from LIGO, we obtain a significant improvement in the inverse false alarm rate. Our work highlights the potential of large neural networks in gravitational-wave data analysis. Although WaveFormer is primarily demonstrated on LIGO data, its adaptable design indicates promise for broader application within the International Gravitational-Wave Observatories Network (IGWN) in future observational runs.
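One way to quantify how well a denoiser recovers phase and amplitude is to compare the instantaneous amplitude and phase of the recovered and target waveforms, taken from their analytic signals. The sketch below assumes that definition; the exact error metrics used for WaveFormer are defined in the paper and may differ, and `analytic_signal` and `amplitude_phase_errors` are hypothetical helpers. The analytic signal is built with NumPy's FFT by zeroing negative frequencies, the same construction used by `scipy.signal.hilbert`. Note that this sketch reports the phase error in radians.

```python
import numpy as np


def analytic_signal(x):
    """Analytic signal via FFT: keep DC (and Nyquist), double positive, zero negative frequencies."""
    x = np.asarray(x, dtype=float)
    n = x.size
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1 : n // 2] = 2.0
    else:
        weights[1 : (n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * weights)


def amplitude_phase_errors(recovered, target, eps=1e-12):
    """Hypothetical metrics comparing instantaneous amplitude and phase.

    Returns (relative amplitude error, amplitude-weighted mean |phase error| in radians).
    """
    z_rec = analytic_signal(recovered)
    z_tgt = analytic_signal(target)
    amp_rec, amp_tgt = np.abs(z_rec), np.abs(z_tgt)
    amp_err = np.sum(np.abs(amp_rec - amp_tgt)) / (np.sum(amp_tgt) + eps)
    # The angle of z_rec * conj(z_tgt) is the phase difference wrapped to (-pi, pi].
    dphi = np.angle(z_rec * np.conj(z_tgt))
    phase_err = np.sum(amp_tgt * np.abs(dphi)) / (np.sum(amp_tgt) + eps)
    return float(amp_err), float(phase_err)


# Usage: a recovered signal 10% too loud and 0.2 rad out of phase.
t = np.arange(2048) / 2048.0
target = np.sin(2 * np.pi * 50 * t)
recovered = 1.1 * np.sin(2 * np.pi * 50 * t + 0.2)
amp_err, phase_err = amplitude_phase_errors(recovered, target)  # about 0.1 and 0.2
```

Weighting the phase error by the target amplitude keeps regions where the signal is essentially absent, and its phase is therefore meaningless, from dominating the metric.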

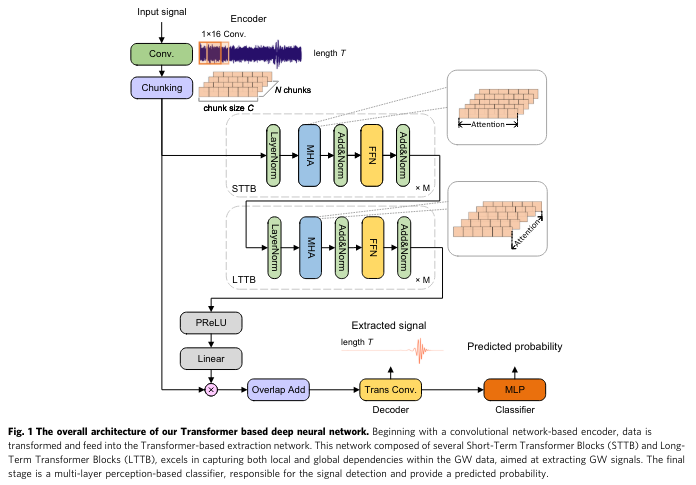

Space-based gravitational wave signal detection and extraction with deep neural network

Paper Link: https://www.nature.com/articles/s42005-023-01334-6

Space-based gravitational-wave (GW) detectors will be able to observe signals from sources that are nearly impossible to detect with current ground-based detectors. Consequently, the well-established signal detection method, matched filtering, will require a complex template bank, leading to a computational cost that is prohibitive in practice. Here, we develop a high-accuracy GW signal detection and extraction method for all space-based GW sources. As a proof of concept, we show that a science-driven and uniform multi-stage self-attention-based deep neural network can identify synthetic signals submerged in Gaussian noise. Our method achieves a detection rate exceeding 99% for signals from various sources at a signal-to-noise ratio of 50 and a false alarm rate of 1%, while obtaining at least 95% similarity with the target signals. We further demonstrate the interpretability and strong generalization of the method in several extended scenarios.
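In GW data analysis, the similarity between an extracted signal and its target is commonly measured by the normalized overlap. The sketch below assumes white noise, so the noise-weighted inner product reduces to an ordinary dot product; `overlap` is a hypothetical helper, and the paper's exact similarity definition may differ. Setting `maximize_shift=True` additionally maximizes over circular time shifts using an FFT cross-correlation.

```python
import numpy as np


def overlap(a, b, maximize_shift=False):
    """Normalized overlap <a, b> / sqrt(<a, a> <b, b>) under a white-noise assumption.

    With maximize_shift=True, the overlap is maximized over circular time shifts
    using an FFT-based cross-correlation. Assumes len(a) == len(b).
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    norm = np.sqrt(np.dot(a, a) * np.dot(b, b))
    if not maximize_shift:
        return float(np.dot(a, b) / norm)
    # corr[k] = sum_n a[n + k] * b[n], with indices taken modulo len(a).
    corr = np.fft.irfft(np.fft.rfft(a) * np.conj(np.fft.rfft(b)), n=a.size)
    return float(np.max(corr) / norm)


# Usage: compare a slightly noisy "extracted" signal with its clean target.
t = np.arange(1024) / 1024.0
target = np.sin(2 * np.pi * 8 * t) * np.exp(-((t - 0.5) ** 2) / 0.01)
extracted = target + 0.05 * np.random.default_rng(0).normal(size=t.size)
similarity = overlap(extracted, target)
```

An overlap of 1 means the two waveforms are identical up to an overall amplitude scale, so a threshold such as 95% similarity constrains shape and phase rather than loudness.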

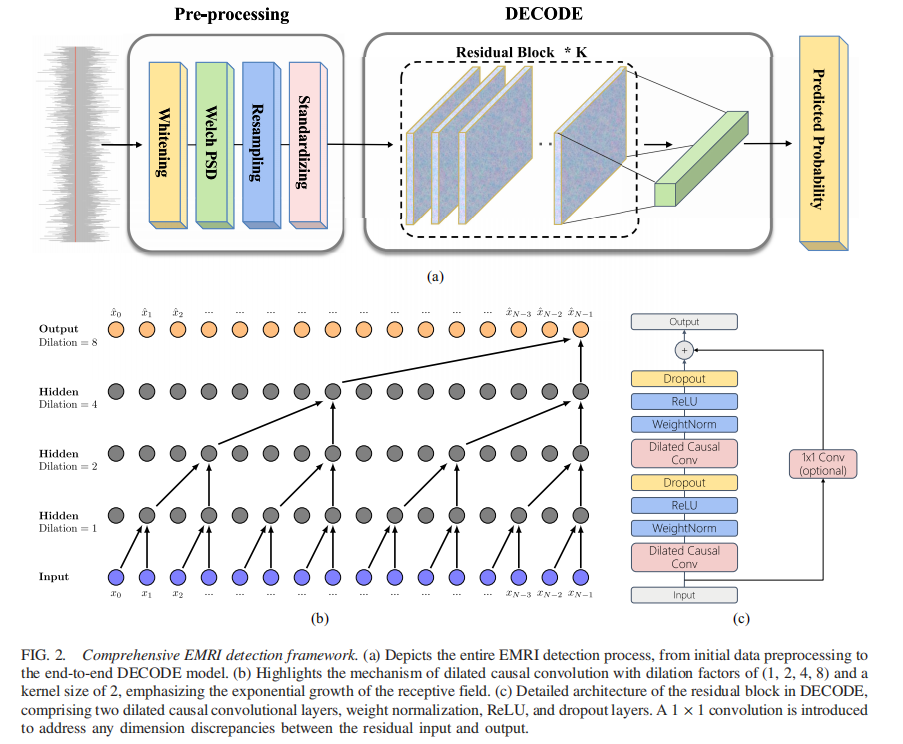

Dilated convolutional neural network for detecting extreme-mass-ratio inspirals

Paper Link: https://journals.aps.org/prd/abstract/10.1103/PhysRevD.109.084054

The detection of extreme-mass-ratio inspirals (EMRIs) is intricate due to their complex waveforms, extended duration, and low signal-to-noise ratio (SNR), which make them more challenging to identify than compact binary coalescences. While matched-filtering-based techniques are known to be computationally demanding, existing deep-learning-based methods primarily handle time-domain data and are often constrained by data duration and SNR. In addition, most existing work ignores time-delay interferometry (TDI) and applies the long-wavelength approximation in detector response calculations, which limits its ability to handle laser frequency noise. In this study, we introduce the dilated convolutional neural network for detecting extreme-mass-ratio inspirals (DECODE), an end-to-end model focusing on EMRI signal detection by sequence modeling in the frequency domain. Centered around a dilated causal convolutional neural network and trained on synthetic data that include the TDI-1.5 detector response, DECODE can efficiently process a year’s worth of multichannel TDI data with an SNR of around 50. We evaluate our model on one-year data with accumulated SNR ranging from 50 to 120 and achieve a true positive rate of 96.3% at a false positive rate of 1%, with an inference time of less than 0.01 seconds. With the visualization of three showcased EMRI signals for interpretability and generalization, DECODE exhibits strong potential for future space-based gravitational-wave data analyses.
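The receptive-field argument behind dilated causal convolutions can be sketched in NumPy. This is a generic WaveNet-style illustration, not DECODE's architecture: each layer computes y[t] = sum_k w[k] x[t - k d], so outputs never depend on future samples, and doubling the dilation d at each layer makes the receptive field grow exponentially with depth (1 + (K - 1) times the sum of the dilations, for kernel size K). The names `dilated_causal_conv1d`, `dilated_stack`, and `receptive_field` are hypothetical.

```python
import numpy as np


def dilated_causal_conv1d(x, w, dilation):
    """y[t] = sum_k w[k] * x[t - k * dilation], treating samples before t = 0 as zero."""
    x = np.asarray(x, dtype=float)
    pad = (len(w) - 1) * dilation
    xp = np.concatenate([np.zeros(pad), x])  # left padding only, so no future leakage
    y = np.zeros_like(x)
    for k, wk in enumerate(w):
        start = pad - k * dilation  # tap k looks k * dilation samples into the past
        y += wk * xp[start : start + x.size]
    return y


def dilated_stack(x, kernels, dilations):
    """Apply dilated causal convolutions layer by layer, with a ReLU after each layer."""
    for w, d in zip(kernels, dilations):
        x = np.maximum(dilated_causal_conv1d(x, w, d), 0.0)
    return x


def receptive_field(kernel_size, dilations):
    """Number of samples (current one included) that a single output can see."""
    return 1 + (kernel_size - 1) * sum(dilations)


# Usage: kernel size 2 with dilations 1, 2, 4, 8 gives a 16-sample receptive field.
dilations = [1, 2, 4, 8]
impulse = np.zeros(32)
impulse[0] = 1.0
response = dilated_stack(impulse, [np.ones(2)] * 4, dilations)
```

With kernel size 2 and dilations 1, 2, ..., 2^L, the receptive field is 2^(L+1) samples while the cost grows only linearly in depth, which is the general motivation for using this design on very long input sequences.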

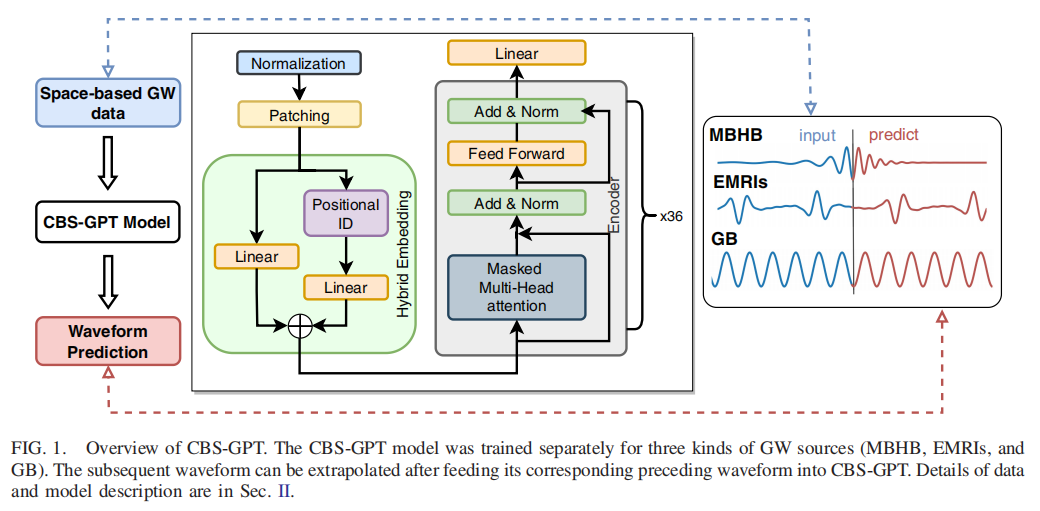

Compact binary systems waveform generation with a generative pretrained transformer

Paper Link: https://journals.aps.org/prd/abstract/10.1103/PhysRevD.109.084017

Space-based gravitational-wave (GW) detection is one of the most anticipated GW detection projects of the next decade and promises to detect abundant compact binary systems. At present, deep learning methods have not been widely explored for GW waveform generation and extrapolation. To address the data processing difficulty and the increasing waveform complexity caused by the detector’s response and second-generation time-delay interferometry (TDI 2.0), we propose an interpretable pretrained large model named Compact Binary Systems Waveform Generation with Generative Pretrained Transformer (CBS-GPT). For compact binary system waveforms, three models were trained to predict the waveforms of massive black hole binaries, extreme-mass-ratio inspirals, and galactic binaries, achieving prediction accuracies of up to 99%, 91%, and 99%, respectively. The CBS-GPT model exhibits notable generalization and interpretability, with its hidden parameters effectively capturing the intricate information of the waveforms, even with the complex instrument response and a wide parameter range. Our research demonstrates the potential of large models in the GW realm, opening up new opportunities and guidance for future research such as complex waveform generation, gap completion, and deep learning model design for GW science.
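The core operation of a generative pretrained transformer is causal (masked) self-attention over a sequence of tokens. Below is a minimal single-head NumPy sketch, assuming the waveform is split into fixed-length patches that serve as tokens; the patching scheme, `patchify`, `causal_self_attention`, and the random projection matrices are illustrative assumptions, not CBS-GPT's actual configuration. The causal mask guarantees that the output at position i depends only on tokens 0 through i, which is what allows a model to be trained to predict each next patch and then extrapolate a waveform autoregressively.

```python
import numpy as np


def patchify(waveform, patch_len):
    """Split a 1-D waveform into non-overlapping tokens of length patch_len (drops any tail)."""
    n = len(waveform) // patch_len
    return np.asarray(waveform[: n * patch_len], dtype=float).reshape(n, patch_len)


def causal_self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product attention with a causal mask."""
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    scores = q @ k.T / np.sqrt(k.shape[-1])
    n = scores.shape[0]
    # Mask future positions: token i may attend only to tokens j <= i.
    mask = np.triu(np.ones((n, n), dtype=bool), k=1)
    scores = np.where(mask, -np.inf, scores)
    # Numerically stable softmax over each row; masked entries become exactly 0.
    scores -= scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


# Usage: 256 samples -> 16 tokens of 16 samples, projected to 8 dimensions.
rng = np.random.default_rng(0)
wave = np.sin(np.linspace(0.0, 20.0 * np.pi, 256))
tokens = patchify(wave, 16)
wq, wk, wv = (rng.normal(scale=0.1, size=(16, 8)) for _ in range(3))
out = causal_self_attention(tokens, wq, wk, wv)  # shape (16, 8)
```

In a full GPT-style model, several such layers (with multiple heads, feed-forward blocks, and positional information) would be stacked, and the output at the last position would be mapped back to a predicted next patch.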